Redundancy is a core concept in computing, closely tied to data protection and error mitigation. It plays a significant role in ensuring availability, reliability, and fault tolerance in computing systems. Redundancy techniques provide backup and recovery mechanisms that help prevent downtime and keep operations running.

Redundancy refers to the inclusion of additional components, resources, or mechanisms in a computer system beyond what normal operation strictly requires, so that a spare can take over when something fails. By implementing redundancy, the system can continue to function even in the event of failures or errors. Redundant systems are designed to be fault-tolerant, meaning that they can withstand failures and continue operating, ideally without a noticeable loss of performance.

There are different levels of redundancy that can be implemented, depending on the specific requirements of the system. Redundancy levels can range from duplicating critical components or resources to creating fully redundant systems with complete replicas. The goal is to ensure that in case of a failure, the redundant system or components can seamlessly take over the operations, minimizing downtime and potential loss of data.

One common example of redundancy is in data backup solutions. By maintaining redundant copies of data, either on different physical devices or in remote locations, organizations can ensure that their data remains safe and accessible even in the event of a failure. Redundancy in data backup allows for the reconstruction and recovery of important information, reducing the risk of data loss.

What is Redundancy?

In the field of computer science, redundancy refers to the practice of incorporating backup or duplicate components into a system or network. The purpose of this redundancy is to ensure that the system remains functional even in the event of a failure or malfunction. Redundancy levels can vary and are typically implemented based on the desired level of data protection, reliability, and availability.

A redundant system typically includes multiple copies of critical components such as servers, storage devices, or network connections. In the event of a failure, these redundant components can be used to reconstruct or recover the system, ensuring minimal downtime and uninterrupted operation.

Redundancy is especially important in critical computing systems where any downtime can lead to significant financial or operational losses. By implementing redundancy techniques, such as fault-tolerant designs and redundant backups, organizations can protect their data and ensure the continuous operation of their systems.

One common example of redundancy in computer science is a redundant array of independent disks (RAID). In a RAID system, data is distributed across multiple disk drives; depending on the RAID level, this can improve performance, fault tolerance, or both. In levels that store mirrored copies or parity information (for example RAID 1, RAID 5, and RAID 6), data on a failed disk can be reconstructed from the remaining disks, preventing data loss and minimizing downtime. RAID 0, which only stripes data, provides no redundancy.
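
The reconstruction idea behind parity-based RAID can be illustrated with a few lines of Python. This is a minimal sketch, not how any particular RAID controller is implemented: the block contents and the helper name `xor_blocks` are made up for illustration, and real arrays work on fixed-size stripes and rotate parity across disks.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks, as if striped across three disks (hypothetical contents).
data_blocks = [b"ABCD", b"EFGH", b"IJKL"]

# The parity block would be stored on a fourth disk.
parity = xor_blocks(data_blocks)

# Simulate losing the second disk, then rebuild its block
# from the surviving data blocks plus the parity block.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data_blocks[1])
```

Because XOR is its own inverse, combining the parity block with every surviving data block yields exactly the missing block. The same property means a single parity block can only cover the loss of one disk at a time.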

Overall, redundancy plays a crucial role in ensuring the reliability and availability of computer systems. By implementing redundant designs and backup solutions, organizations can safeguard their data, minimize the impact of failures, and maintain uninterrupted operation.

Definition of Redundancy

Redundancy, in the context of computer science, refers to the concept of including extra components or resources in a system to improve its reliability and fault-tolerance. It is a technique aimed at minimizing the impact of errors, failures, and downtime in computing systems.

A redundant system is one that has multiple copies of critical components or resources, such as redundant servers or redundant storage devices. The redundancy levels can vary depending on the specific requirements and constraints of the system.

Redundancy techniques are commonly used in data protection and system recovery. By incorporating redundancy in computer science, organizations can ensure the availability of critical data and services even in the event of a failure or malfunction.

One common example of redundancy is the use of backup solutions. These backups provide additional copies of data, enabling its reconstruction and recovery in case of data loss or system failure. Redundant design and fault-tolerant architectures are also employed to increase the reliability and resilience of computing systems.

Overall, redundancy plays a crucial role in ensuring the stability and continuity of computing systems. By implementing redundancy techniques, organizations can minimize the risk of errors, downtime, and data loss, thus improving the overall reliability of their systems.

Importance of Redundancy in Computer Science

In computer science, redundancy is a critical concept that plays a vital role in ensuring the reliability and availability of systems. Redundancy refers to the duplication of components or data in a system, providing additional layers of protection against failures and errors.

One of the key reasons why redundancy is important is data protection. By having multiple copies of important data, a redundant system can ensure that even if one copy is lost or corrupted, the data can be reconstructed or recovered from another source. This helps prevent data loss and minimizes the impact of an error or failure.
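
As a rough illustration of recovering from a lost or corrupted copy, the sketch below keeps a checksum alongside several replicas and returns the first replica that still matches it. The function names and the in-memory list of replicas are assumptions made for the example; a real system would read the copies from separate disks or sites.

```python
import hashlib


def checksum(payload: bytes) -> str:
    """Return the SHA-256 digest of a payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()


def read_verified(replicas, expected_digest):
    """Return (index, payload) of the first replica matching the digest."""
    for index, payload in enumerate(replicas):
        # None stands for a replica that is missing entirely.
        if payload is not None and checksum(payload) == expected_digest:
            return index, payload
    raise IOError("no intact replica available")


original = b"customer ledger v1"
digest = checksum(original)

# One corrupted copy, one lost copy, one intact copy (hypothetical data).
replicas = [b"customer ledger v?", None, original]

index, payload = read_verified(replicas, digest)
print(index, payload == original)
```

Storing the checksum separately from the data is what lets the reader tell a corrupted copy from a good one; without it, two differing replicas would give no hint about which to trust.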

Redundancy also enhances system availability. Downtime can be detrimental to businesses and organizations, causing financial losses and customer dissatisfaction. By implementing redundancy techniques, such as backup solutions or redundant designs, a system can continue to operate even if one component fails, ensuring uninterrupted service and minimizing downtime.

Another crucial aspect of redundancy is reliability. A redundant system is less prone to complete failure because no single component is a single point of failure, and the workload can be distributed across multiple components or nodes. If one component fails, the system can switch to a backup component, reducing the risk of a complete system failure. This enhances the overall reliability of the system.

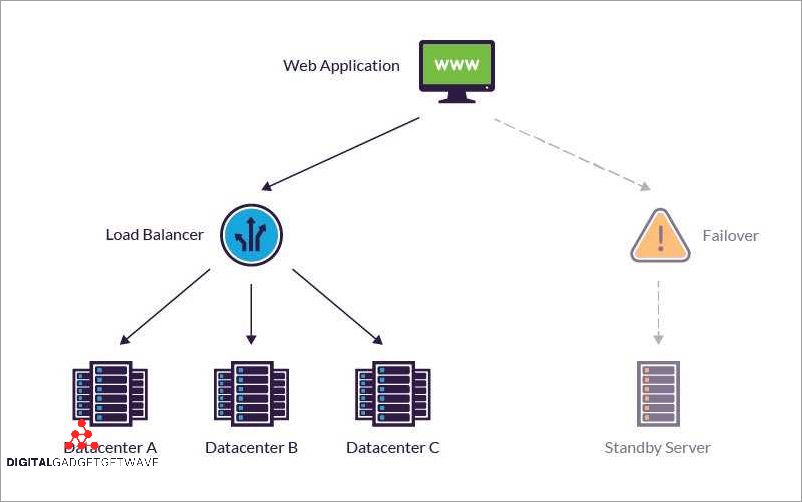

Furthermore, redundancy can also improve the speed of recovery in case of a failure. Systems with redundant components can perform automatic failovers, where the workload is automatically shifted to a backup component without manual intervention. This reduces the time required to recover from a failure and minimizes the impact on users or customers.
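
The automatic failover described above can be sketched as a loop that tries each replica in order and moves on when one fails. The handler functions `primary` and `backup` and the helper `call_with_failover` are hypothetical stand-ins for real services; production failover usually also involves health checks and timeouts, which are left out here.

```python
def call_with_failover(handlers, request):
    """Try each (name, handler) in order; return the first successful result."""
    errors = []
    for name, handler in handlers:
        try:
            return name, handler(request)
        except Exception as exc:
            # Record the failure and fall through to the next replica.
            errors.append((name, exc))
    raise RuntimeError(f"all replicas failed: {errors}")


def primary(request):
    # Simulated outage of the primary component.
    raise ConnectionError("primary is down")


def backup(request):
    return request.upper()


served_by, response = call_with_failover(
    [("primary", primary), ("backup", backup)], "ping"
)
print(served_by, response)
```

The caller never has to know which component answered, which is what makes the switch happen without manual intervention.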

In conclusion, redundancy in computer science is of paramount importance for data protection, availability, recovery, and overall system reliability. By implementing redundancy techniques and designs, organizations can ensure that their systems are resilient to failures and errors, providing a seamless and uninterrupted computing experience.

Types of Redundancy

Redundancy is a crucial concept in computer science that helps ensure the availability and reliability of systems. There are several types of redundancy that are commonly employed in computing systems.

1. Redundant System: This type of redundancy involves having multiple identical systems in place to handle computing tasks. If one system fails, the redundant design ensures that another system can take over seamlessly, minimizing downtime and maintaining system availability.

2. Redundant Data: Redundancy in data protection involves creating backups of important information to guard against data loss in the event of a system failure. These backups can be stored locally or in remote locations, providing an extra layer of protection.

3. Fault-Tolerant Design: Systems with fault-tolerant designs are built to withstand failures and continue operating without interruption. This can involve employing redundant components, such as redundant power supplies or network connections, to ensure continuous availability.

4. Redundancy Techniques: There are various techniques used to achieve redundancy in computing systems. This can include mirroring, where data is duplicated on multiple devices, or using RAID (Redundant Array of Independent Disks) to distribute data across multiple disk drives for increased performance and fault tolerance.

5. Recovery and Reconstruction: In the event of a failure, recovery and reconstruction techniques can help restore the system to its normal operating state. These techniques often involve utilizing the redundant data or systems to rebuild and restore lost or corrupted information.

6. Redundancy Levels: Redundancy can be implemented at different levels within a system, from the hardware level, where redundant components are used, to the software level, where redundant processes or algorithms are employed. Determining the appropriate level of redundancy depends on factors such as the criticality of the system and the desired level of availability.

Overall, redundancy in computer science plays a vital role in ensuring system reliability, availability, and data protection. By implementing redundant systems, designs, and techniques, organizations can minimize the impact of failures and reduce downtime, ultimately leading to improved system performance and user satisfaction.

Hardware Redundancy

Hardware redundancy is an essential aspect of creating a redundant system in computer science. It ensures data protection and enhances the overall reliability of the computing environment. Redundancy in hardware involves the use of multiple components or systems to provide backup solutions and minimize the risk of system failure. This redundancy allows for fault-tolerant and high availability systems, reducing downtime and ensuring continuous operation.

There are different redundancy levels that can be implemented in hardware systems. These levels include component redundancy, where redundant components are used in a system for backup and reconstruction purposes. Additionally, system redundancy involves employing redundant systems with identical or similar functionalities to provide backup in case of failure.

Redundancy techniques utilized in hardware include data redundancy, which involves duplicating data across multiple storage devices or servers. This duplication ensures that even if one device or server fails, the data can still be retrieved from another source, minimizing the risk of data loss. Another technique is the use of redundant design, where critical components are duplicated or triplicated to ensure the system’s reliability and to detect and correct errors.
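
Triplicating a component so that errors can be detected and corrected is often called triple modular redundancy: three copies compute the same result and a voter takes the majority. The sketch below shows only the voting step in Python, with made-up output values; in hardware the voter is itself a circuit, and the function name `majority_vote` is an assumption for this example.

```python
from collections import Counter


def majority_vote(outputs):
    """Return the value produced by a strict majority of the modules."""
    value, count = Counter(outputs).most_common(1)[0]
    if count * 2 <= len(outputs):
        raise ValueError("no majority among module outputs")
    return value


# Two healthy modules agree; the third has produced a faulty result.
module_outputs = [42, 42, 17]
print(majority_vote(module_outputs))
```

With three modules, one faulty output is outvoted by the other two. If all three disagree, the voter can detect that something is wrong but cannot tell which value is correct.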

Hardware redundancy plays a crucial role in disaster recovery and business continuity planning. By implementing redundant systems and backup solutions, organizations can quickly recover from hardware failures or system errors, leading to minimal disruption and improved recovery time. The redundancy provided by multiple hardware components or systems increases the overall reliability and availability of the computing environment, ensuring smooth operations even in the face of failures or errors.

In summary, hardware redundancy is an essential aspect of ensuring data protection, fault-tolerance, and high availability in computer science. By employing redundant components and systems, organizations can enhance the reliability and resilience of their computing environment. Redundancy techniques such as data redundancy and redundant design minimize the risk of system failures and ensure consistent operations. Implementing hardware redundancy is crucial for organizations aiming to minimize downtime and maximize the reliability of their computing infrastructure.

Software Redundancy

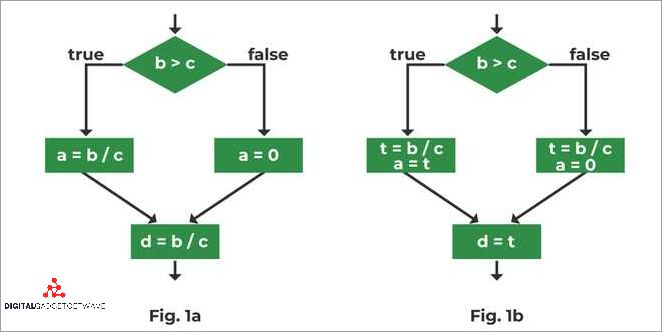

Software redundancy is an essential concept in computer science and data protection, aimed at ensuring the reliability and availability of computing systems. It involves the implementation of redundant components or processes that can mitigate the impact of failures and minimize downtime. By employing redundancy in design, organizations can greatly enhance the fault-tolerant capabilities of their systems and prevent significant disruptions caused by hardware or software failures.

One of the key aspects of software redundancy is data protection and recovery. Redundant systems are designed to store multiple copies of critical data, allowing for seamless reconstruction in the event of an error or failure. This redundancy level ensures that data remains intact and accessible even in the face of technological or operational challenges.

A common approach to achieving redundancy in computer science is through the use of a backup solution. This involves regularly creating copies of critical data and storing them in separate locations or systems. In the event of a failure, the backup can be used to rapidly restore normal operations and minimize the impact on user experience. Additionally, redundant designs often include fallback mechanisms that automatically switch to backup components or processes when failures occur, enabling uninterrupted operation.

Software redundancy can be provided at various levels, depending on the specific needs and requirements of an organization. For instance, a system may employ mirroring, where data is simultaneously written to multiple locations for immediate redundancy. Alternatively, some systems use RAID (Redundant Array of Independent Disks), which combines multiple physical storage devices into a single logical unit. RAID can be implemented in software or in hardware and, depending on the level chosen, increases fault tolerance, performance, or both.

Overall, software redundancy plays a critical role in improving the reliability and availability of computing systems. It ensures that failures or errors do not result in significant disruptions or data loss. By implementing redundant designs and backup solutions, organizations can minimize downtime, enhance reliability, and provide uninterrupted service to their users.

Data Redundancy

Data redundancy is a crucial concept in computer science that plays a significant role in ensuring data protection and reducing system downtime. In computing, data redundancy refers to the duplication of data within a system or across multiple systems. By having redundant copies of data, a system can minimize the risk of data loss in the event of a failure or error.

A redundant system is designed to provide high availability and reliability by utilizing redundancy techniques. These techniques involve storing multiple copies of data in different locations or using redundant components to ensure continuous operation. This fault-tolerant approach allows the system to continue functioning even if certain components fail. Redundancy in computer science is an essential aspect of ensuring data integrity and system stability.

One of the primary benefits of data redundancy is the ability to recover data in the case of a failure or error. By having redundant copies of data, the system can reconstruct the lost or corrupted data from the remaining copies. This improves the overall availability and reliability of the system, as it reduces the risk of data loss and minimizes the impact of failures.

There are different levels of redundancy that can be implemented depending on the specific requirements of a system. Redundancy can be achieved at various levels, such as data level, hardware level, or network level. Each level of redundancy provides an additional layer of protection and ensures the system’s ability to recover from errors and failures.

A redundant design includes features such as backup solutions, redundant components, and failover mechanisms. Backup solutions involve regularly creating copies of data and storing them in separate locations. Redundant components, such as redundant power supplies or storage devices, ensure that the system can continue operating even if one component fails. Failover mechanisms automatically switch to redundant systems or resources when a failure occurs, resulting in minimal downtime and uninterrupted operation.

In conclusion, data redundancy is a critical aspect of computer science that focuses on ensuring data protection, improving system availability, and reducing downtime. By implementing redundancy techniques and adopting a redundant design, systems can be more reliable, resilient, and capable of recovering from failures or errors.

Benefits of Redundancy

Redundancy is a crucial concept in computer science that offers several benefits for data protection and system reliability. By implementing redundancy techniques, organizations can ensure the availability and fault-tolerant operation of their computing systems.

One of the primary benefits of redundancy is the ability to have backup solutions in place. Redundant systems provide a safety net in case of failure, preventing potential downtime and ensuring continuous operation. In the event of an error or failure, redundant designs allow for the automatic reconstruction of data or services, minimizing the impact on users and reducing the risk of data loss.

In addition to data protection, redundancy also increases the availability of computing systems. With redundant components, such as servers or network connections, the system can continue to operate even if one or more components fail. This improves the overall reliability of the system, reducing the likelihood of service interruptions and enhancing the user experience.

There are different levels of redundancy that organizations can implement depending on their specific needs. For example, they can use hot standby redundant systems, where a backup system is always ready to take over in case of failure, or cold standby systems, where the backup system needs to be manually activated. The choice of redundancy level depends on factors like cost, system requirements, and the importance of maintaining uptime.

Overall, redundancy in computer science plays a critical role in ensuring the reliability and availability of computing systems. By implementing redundant designs and backup solutions, organizations can protect their data, minimize downtime, and provide a seamless user experience.

Improved System Reliability

In computer science, redundancy is a key concept in ensuring the reliability and availability of a system. By implementing redundant components and backup solutions, the impact of component failures can be mitigated and many outages avoided, although no design removes the possibility of failure entirely. Redundancy can be achieved at various levels, such as hardware redundancy, software redundancy, and data redundancy.

Hardware redundancy involves the use of duplicate components in a system, ensuring that if one component fails, another can take over its functionality. This redundant design helps to minimize downtime and allows for seamless operation even in the event of a hardware failure. Redundancy techniques, such as RAID (Redundant Array of Independent Disks), can provide fault-tolerant storage solutions with data protection and recovery capabilities.

Software redundancy involves the use of redundant software components to ensure continuous operation in the face of errors or failures. This can involve implementing multiple instances of critical software services, allowing for automatic failover in case one instance encounters an error. These redundancy levels can greatly enhance the availability and reliability of the system.

Data redundancy plays a crucial role in ensuring data integrity and availability. By storing multiple copies of data across different storage devices or locations, the risk of data loss due to hardware failures or disasters can be reduced. In the event of a failure, data can be reconstructed from the redundant copies, ensuring that critical information remains accessible.

Overall, implementing redundancy in computer science is essential for improving system reliability. Whether it is through hardware redundancy, software redundancy, or data redundancy, redundant systems can minimize downtime, enhance availability, and provide reliable computing resources. By incorporating redundant designs and backup solutions, organizations can achieve a robust and fault-tolerant infrastructure that can withstand failures and continue to operate efficiently.

Enhanced Fault Tolerance

In computing, fault tolerance refers to a system’s ability to continue functioning properly in the presence of errors or failures. One important aspect of fault tolerance is enhanced availability, which aims to minimize downtime and ensure continuous operation even in the event of a failure.

A redundant system is commonly used to enhance fault tolerance. In a redundant design, multiple components or resources are duplicated to provide backup in case of failure. This redundancy helps to mitigate the impact of a single failure, as the redundant components can take over the failed ones and keep the system operational.

There are various redundancy techniques employed in computer science to improve fault tolerance. One such technique is data protection through redundancy, where data is duplicated and distributed across multiple storage devices or systems. This ensures that even if one storage device fails, the data can be reconstructed or recovered from the redundant copies.

Redundancy can be implemented at different levels, such as hardware redundancy and software redundancy. Hardware redundancy involves having duplicate hardware components, such as servers, network connections, or power supplies, that can take over in case of failure. Software redundancy, on the other hand, involves duplicating or replicating critical software processes or modules to ensure uninterrupted operation.

A fault-tolerant system built on redundancy is crucial for applications that require high reliability, such as mission-critical systems, telecommunications networks, or financial transaction processing. By implementing redundancy techniques, organizations can significantly improve the reliability and availability of their systems, reducing the risk of downtime and ensuring continuous operation even in the face of errors or failures.

Minimized Downtime

Availability is a key factor in the success of any system. In computer science, redundancy plays a crucial role in achieving high availability. A redundant system incorporates backup solutions and redundancy techniques so that component failures cause as little downtime as possible.

Redundancy can be applied at several levels of a system. A redundant design duplicates critical components or systems, ensuring that if one fails, another takes over. This fault-tolerant approach allows operation to continue and minimizes the impact of failures.

In the event of a failure, an effective recovery and reconstruction mechanism is essential. Redundant systems are capable of quickly detecting and isolating errors, minimizing the time required to restore operations. This is particularly important in computing environments where downtime can result in significant financial losses or compromise data protection.

By implementing redundancy, organizations can enhance the reliability and availability of their systems. Redundant designs provide a safety net against hardware or software failures, ensuring continuous operations even in the face of potential disruptions.

Overall, minimizing downtime through redundancy is a critical aspect of computer science. Redundant systems not only protect against failures but also contribute to the overall resilience and efficiency of computing environments.

Challenges and Considerations

When it comes to redundancy in computer science, there are several challenges and considerations that need to be taken into account. One of the main challenges is dealing with system failures. A failure can occur at any time and can lead to the loss of important data and disruption of services. To mitigate this risk, redundancy is often implemented.

Redundancy in computing involves having multiple components or systems that can perform the same task. This redundancy helps to ensure that if one component or system fails, another can take over and continue the operation. However, implementing redundancy is not without its own challenges. One challenge is determining the appropriate level of redundancy needed. Too little redundancy can leave a system vulnerable to failure, while too much redundancy can be costly and inefficient.
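
One way to reason about how much redundancy is enough is a back-of-the-envelope availability estimate. The sketch below assumes that copies fail independently and that the system is up as long as at least one copy is up; both are simplifying assumptions, and the 99% figure for a single component is chosen only for illustration.

```python
def parallel_availability(single, copies):
    """Availability of N independent copies where any one copy suffices."""
    return 1 - (1 - single) ** copies


# Hypothetical component that is available 99% of the time.
single = 0.99
for copies in range(1, 5):
    combined = parallel_availability(single, copies)
    print(f"{copies} copies: {combined:.8f}")
```

Under these assumptions, going from one copy to two takes the estimate from 99% to roughly 99.99%, while each further copy adds a smaller absolute gain at the same extra cost. Real components often share failure causes such as power or software bugs, so actual gains tend to be lower than this model suggests.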

Another consideration when it comes to redundancy is the selection of the appropriate redundancy technique. There are different redundancy techniques that can be used, such as data replication, data mirroring, and error coding. Each technique has its strengths and weaknesses and needs to be carefully chosen based on the specific requirements of the system.
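
To make the error coding option more concrete, here is a small sketch of a Hamming(7,4) code, chosen for this example as one classic error-correcting code; the text above does not name a specific code. It adds three parity bits to four data bits and can correct any single flipped bit, which costs far less storage than keeping a full second copy. The function names are assumptions for illustration.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits as a 7-bit codeword (parity at positions 1, 2, 4)."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    # The syndrome is the 1-based position of the flipped bit, or 0 if none.
    error_position = s1 + 2 * s2 + 4 * s4
    if error_position:
        c[error_position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
sent = hamming74_encode(data)

# Flip one bit to simulate a storage or transmission error.
received = list(sent)
received[4] ^= 1

print(hamming74_decode(received) == data)
```

The trade-off against replication is visible in the numbers: seven stored bits protect four data bits against one error, whereas mirroring would need eight bits and could only detect, not locate, a mismatch.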

One important aspect of redundancy is data recovery and backup solutions. When a failure occurs, it is crucial to have a backup solution in place to ensure the availability of services and the ability to recover lost data. This can involve regular backups, offsite backups, or even real-time replication of data.

In addition to data recovery, another consideration is the design of a redundant system. A redundant design ensures that a failure in one component or system does not lead to the complete shutdown of the system. Instead, the redundant components or systems can take over and continue the operation. This design also helps to improve the reliability of the system and minimize downtime.

In conclusion, redundancy in computer science is an important concept that helps to ensure the availability and reliability of systems. However, implementing redundancy comes with its own challenges and considerations. These include determining the appropriate level of redundancy, selecting the right redundancy techniques, and implementing effective data recovery and backup solutions. By addressing these challenges and considerations, organizations can create fault-tolerant systems that can withstand failures and ensure the continuity of operations.

Cost and Complexity

Implementing redundancy in computer science involves additional cost and complexity. The reliability and recovery capabilities provided by redundancy come at a price, both in terms of financial investment and system complexity.

One of the primary costs associated with redundancy is the investment in backup and recovery solutions. Redundancy requires the creation and maintenance of duplicate systems, which can be expensive. Additionally, the implementation of redundancy often requires the purchase of specialized hardware and software to support the redundant design.

Moreover, redundancy increases the complexity of a system. The creation of redundant components and backup systems involves intricate configurations and coordination. This complexity can make it challenging to manage and troubleshoot the system effectively.

Despite the added cost and complexity, redundancy is crucial for ensuring high availability and minimizing downtime. In the event of a failure or error, redundant design allows for quick and efficient recovery. Redundancy techniques, such as data replication and mirroring, provide continuous access to critical data and minimize the impact of system failures.

Overall, the cost and complexity associated with redundancy in computer science are justified by the benefits of increased reliability, availability, and data protection. While the implementation may require significant investment and technical expertise, the advantages of a fault-tolerant and redundant system outweigh the challenges.

Maintenance and Monitoring

Maintenance and monitoring play a crucial role in ensuring the effectiveness of redundancy levels in a computer system. A failure in any component can lead to system downtime, which can be costly for businesses. Therefore, it is essential to implement fault-tolerant measures to minimize the impact of system failures.

Monitoring the system regularly helps in identifying any potential faults or errors. By detecting issues early, it becomes easier to rectify them before they cause any significant disruptions. Proactive monitoring also allows for real-time error detection and prevention, leading to improved system reliability.
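
A common building block for this kind of monitoring is a heartbeat check: each node reports in periodically, and a node that has been silent for too long is flagged. The sketch below uses fixed timestamps so that it is deterministic; the node names, the timeout, and the function `find_stale_nodes` are all assumptions made for the example.

```python
def find_stale_nodes(last_heartbeat, now, timeout_seconds):
    """Return the names of nodes whose last heartbeat is older than the timeout."""
    return sorted(
        node
        for node, seen_at in last_heartbeat.items()
        if now - seen_at > timeout_seconds
    )


# Time of the most recent heartbeat per node, in seconds (hypothetical values).
last_heartbeat = {"node-a": 100.0, "node-b": 91.0, "node-c": 99.5}

stale = find_stale_nodes(last_heartbeat, now=101.0, timeout_seconds=5.0)
print(stale)
```

In a live system the result would typically feed an alert or trigger a failover to a redundant node, and the timeout would be tuned to avoid flagging nodes that are merely slow.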

One of the key aspects of maintenance and monitoring is data protection. Redundant design and redundancy techniques are implemented to ensure data availability and integrity. Regular backups are taken to prevent data loss in the event of a failure. These backups act as a fail-safe mechanism, allowing for quick recovery and reconstruction of the system.

Having a reliable backup solution in place is critical for maintaining the availability of a redundant system. It enables swift recovery in case of any catastrophic failure or disaster. Regular testing of the backup solution ensures its effectiveness and readiness for use during an actual failure.
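
A very small form of backup testing is to compare the backup against the source right after it is written. The sketch below copies a file and checks that both have the same SHA-256 digest; it runs entirely inside a temporary directory, and the file name, the `offsite` folder, and the helper names are placeholders. A digest match only shows that the copy is intact, so a full test would also restore the backup and check that the application can use it.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def backup_and_verify(source: Path, backup_dir: Path) -> bool:
    """Copy source into backup_dir and confirm the copy matches the original."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    copy_path = Path(shutil.copy2(source, backup_dir / source.name))
    return file_digest(copy_path) == file_digest(source)


with tempfile.TemporaryDirectory() as workspace:
    source = Path(workspace) / "records.db"
    source.write_bytes(b"important records")
    verified = backup_and_verify(source, Path(workspace) / "offsite")

print(verified)
```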

Maintenance and monitoring also involve keeping track of system performance and efficiency. This includes monitoring resource utilization, identifying potential bottlenecks, and optimizing system configurations. By constantly monitoring system health, organizations can address any issues promptly, minimizing downtime and maximizing system availability.

Trade-offs and Limitations

When it comes to implementing redundancy in computer science, there are several trade-offs and limitations that need to be considered. One of the main trade-offs is the increased cost associated with redundancy. Implementing redundancy levels can be expensive, requiring additional hardware and software investments.

Another trade-off is the complexity of the fault-tolerant system. Redundant designs can be intricate, making it difficult to maintain and troubleshoot in case of a failure. The redundancy techniques employed must be carefully chosen to strike a balance between system complexity and availability.

While redundancy helps in improving system reliability and recovery, it is important to note that it does not eliminate the possibility of errors altogether. Redundancy does not guarantee error-free operation, as a fault in one part of the redundant system can still affect other parts. Therefore, it’s crucial to regularly test the effectiveness of the redundancy measures and have a backup solution in place.

Redundancy also has its limitations in terms of downtime. Although a redundant system can provide a quick recovery from a failure, there may still be a certain amount of downtime required for the reconstruction or data recovery process. This downtime can impact the availability and performance of the system, leading to potential disruptions in computing operations.
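
The remaining downtime can be put into numbers by converting an availability target into a yearly downtime budget. This is a simple arithmetic sketch; the targets listed are common illustrative values rather than figures from this guide, and a 365-day year is assumed.

```python
HOURS_PER_YEAR = 365 * 24


def annual_downtime_hours(availability):
    """Hours per year a system may be down while still meeting the target."""
    return (1 - availability) * HOURS_PER_YEAR


for target in (0.99, 0.999, 0.9999):
    hours = annual_downtime_hours(target)
    print(f"{target:.2%} available: about {hours:.2f} hours of downtime per year")
```

Seen this way, a recovery procedure that takes several hours can by itself use up most of a 99.9% budget (about 8.76 hours per year), which is why recovery time matters as much as the number of redundant components.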

Overall, while redundancy in computer science can significantly improve the availability and reliability of a system, it is essential to carefully consider the trade-offs and limitations associated with its implementation. By weighing the costs, complexity, and potential downtime, organizations can make informed decisions about incorporating redundancy into their computing infrastructure.

FAQ about “Understanding Redundancy in Computer Science: A Comprehensive Guide”

What is redundancy in computer science?

Redundancy in computer science refers to the inclusion of extra information or resources in a system in order to improve its reliability, availability, or performance. It is a technique used to ensure that the system can continue to function even if certain components fail or unexpected events occur.

Why is redundancy important in computer systems?

Redundancy is important in computer systems because it helps to prevent system failures and minimize downtime. By having redundant components or resources, the system is able to continue operating even if there is a failure in one part. This helps to ensure the reliability and availability of the system, which is especially crucial for mission-critical applications or systems.